Deploying a ZooKeeper Cluster on Kubernetes

Problems encountered during deployment

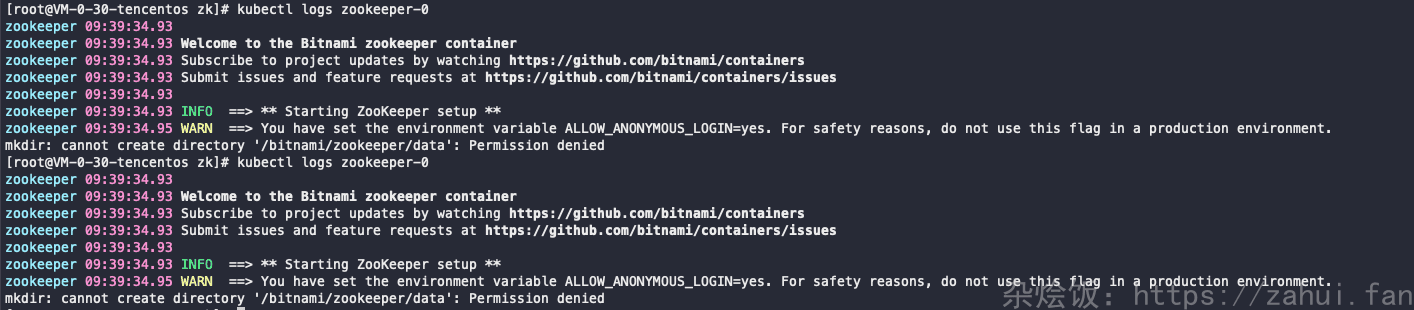

The container has no write permission on the mounted directory.

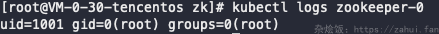

After changing the container's startup command, I could see that the process runs with user ID 1001.

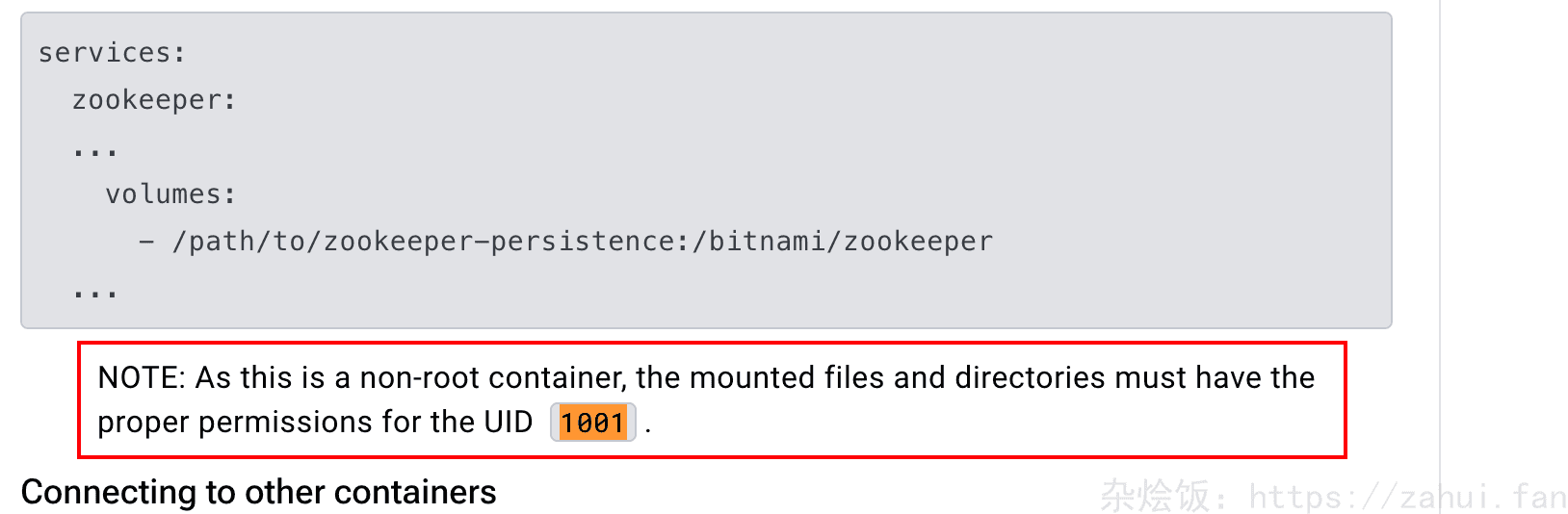
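
One way to run this kind of check is to temporarily override the container's command so that it prints its identity and then stays alive. The fragment below is a minimal sketch rather than the manifest used in this post: the container name `zookeeper` and the image placeholder are assumptions, and it presumes the image ships `sh` and `id`.

```yaml
# Hypothetical fragment of a Pod template spec, intended for debugging only.
containers:
  - name: zookeeper                 # assumed container name
    image: <your-zookeeper-image>   # placeholder; use the image from your own manifest
    # Print the effective UID/GID, then sleep so the output can be read
    # with `kubectl logs` before the container exits.
    command: ["sh", "-c", "id; sleep 3600"]
```

Remove the override once the user ID is known, otherwise ZooKeeper itself never starts.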

The official documentation also mentions this.

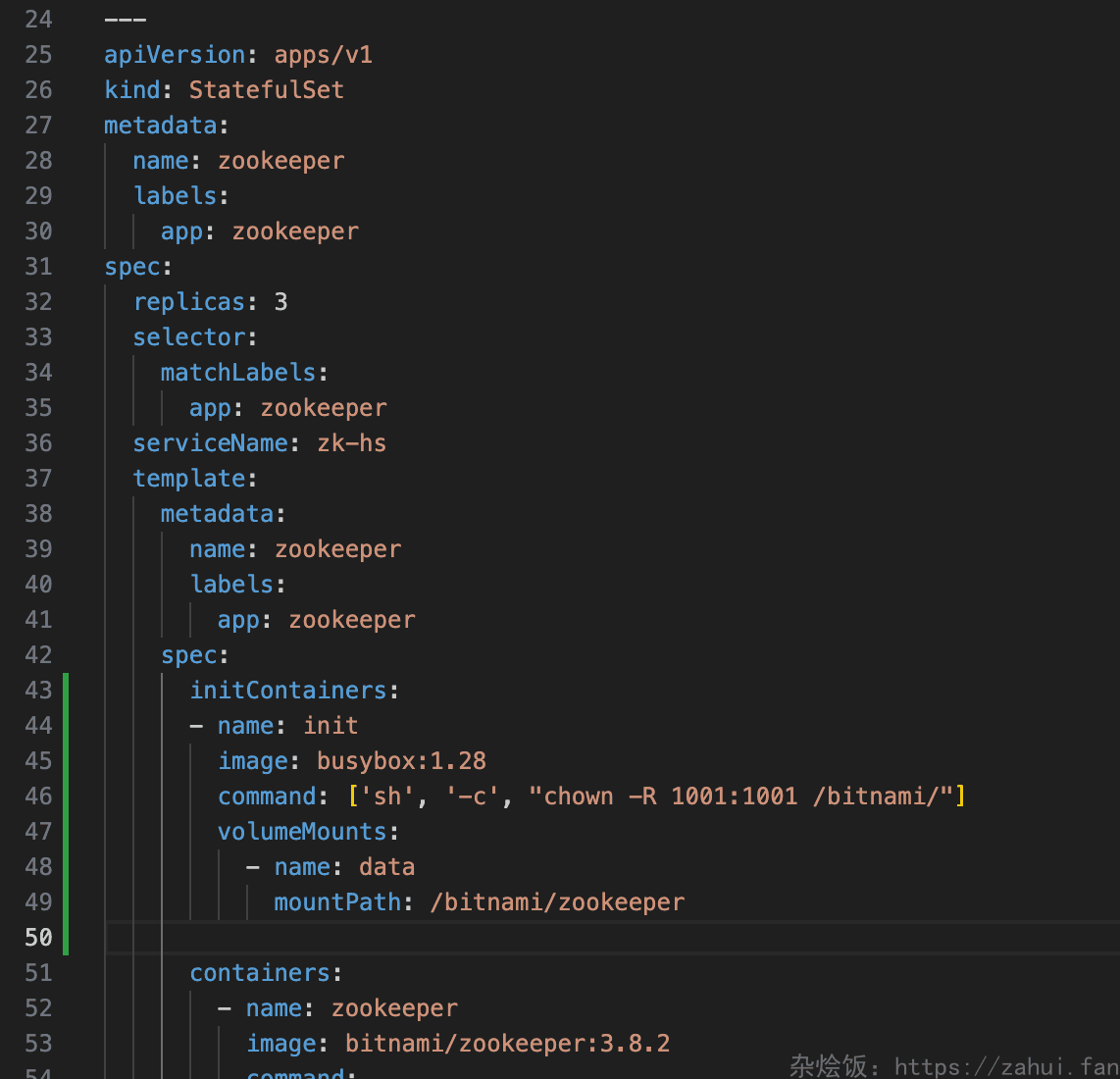

Solution 1: use an init container to grant permissions

```yaml
initContainers:
```

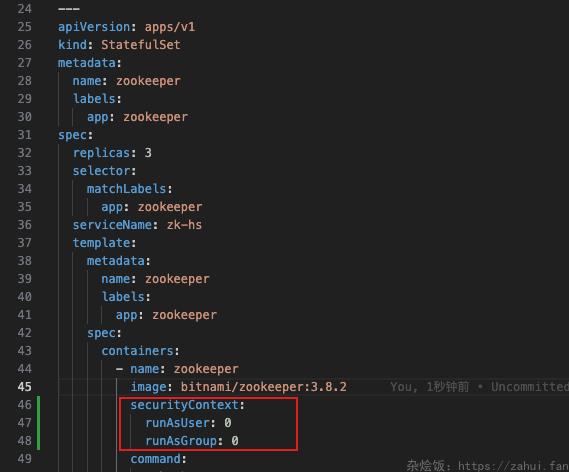
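
The init container approach could look roughly like the sketch below. Everything other than UID 1001 is an assumption made for illustration: the helper image `busybox:1.36`, the container name `fix-permissions`, the volume name `data`, and the mount path `/data` are hypothetical and need to match your own manifest.

```yaml
# Hypothetical fragment of a StatefulSet Pod template; names and paths are assumptions.
initContainers:
  - name: fix-permissions        # hypothetical name
    image: busybox:1.36          # assumed helper image; any image with chown works
    # Hand the data directory to UID 1001, the user the ZooKeeper container runs as.
    command: ["sh", "-c", "chown -R 1001 /data"]
    securityContext:
      runAsUser: 0               # the init container itself runs as root so chown succeeds
    volumeMounts:
      - name: data               # must match the volume mounted by the main container
        mountPath: /data         # hypothetical mount path
```

Only the short-lived init container runs as root here; the ZooKeeper container keeps its non-root user.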

Solution 2: add a security context and run as root

This weakens the container's security and is not recommended!

```yaml
securityContext:
```
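
For reference, a Pod-level security context that forces root might look like the following. This is a minimal sketch of the idea, not necessarily the exact setting used in this post.

```yaml
# Hypothetical Pod-level setting; it lowers isolation, as noted above.
securityContext:
  runAsUser: 0   # run every container in the Pod as root (UID 0)
```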

The final YAML file

```yaml
apiVersion: v1
```

Verifying the cluster

```bash
# Check the FQDN
```
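
The per-Pod FQDNs checked here normally come from a headless Service that governs the StatefulSet. The sketch below shows what such a Service typically looks like. The Service name `zookeeper-headless` and the label `app: zookeeper` are hypothetical, and the ports are ZooKeeper's conventional defaults rather than values taken from this post.

```yaml
# Hypothetical headless Service; names and labels are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: zookeeper-headless
spec:
  clusterIP: None          # headless: DNS resolves to the individual Pods
  selector:
    app: zookeeper         # must match the labels on the StatefulSet Pods
  ports:
    - name: client
      port: 2181           # default client port
    - name: follower
      port: 2888           # default quorum (follower) port
    - name: election
      port: 3888           # default leader-election port
```

When the StatefulSet's `serviceName` points at this Service, each Pod is reachable as `<pod-name>.zookeeper-headless.<namespace>.svc.cluster.local`.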

Verifying the data

```bash
kubectl run --rm -it zookeeper-client --image=zookeeper:3.8.2 -- bash
```

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit the source, 杂烩饭, when reposting!
